Meta’s AI image generator is coming under fire for its apparent struggles to create images of couples or friends from different racial backgrounds.

When prompted by CNN on Thursday morning to create a picture of an Asian man with a White wife, the tool spit out a slew of images of an East Asian man with an East Asian woman. The same thing happened when prompted by CNN to create an image of an Asian woman with a White husband.

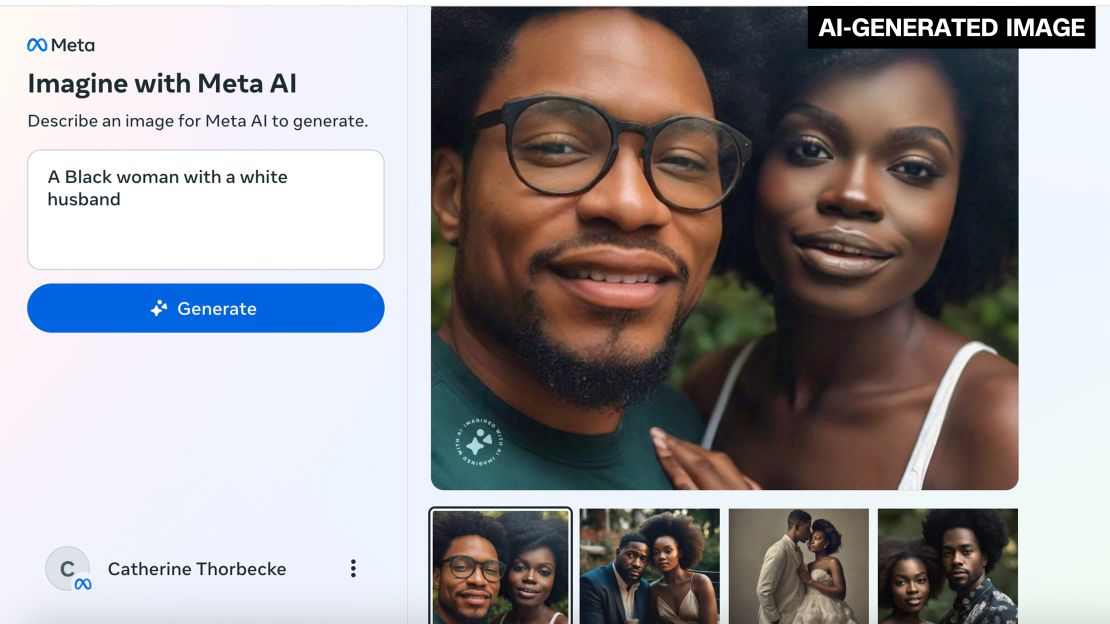

When asked to create an image of a Black woman with a White husband, Meta’s AI feature generated a handful of images featuring Black couples.

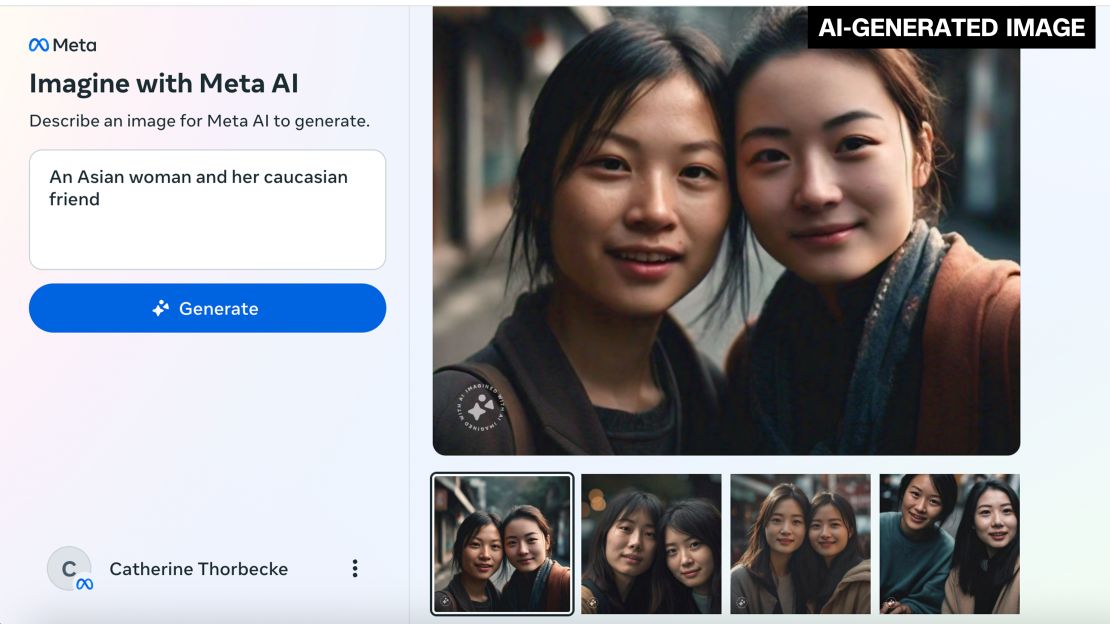

The tool also generated images of two Asian women when asked to create an image of an Asian woman and her Caucasian friend. Moreover, a request for an image of a Black woman and her Asian friend resulted in snaps of two Black women.

However, when asked to create an image of a Black Jewish man and his Asian wife, it generated a photo of a Black man wearing a yarmulke and an Asian woman. And after repeated attempts by CNN to get a picture of an interracial couple, the tool eventually created an image of a White man with a Black woman and one of a White man with an Asian woman.

Meta released its AI image generator in December. Tech news outlet The Verge first reported the issue with race on Wednesday, highlighting how the tool “can’t imagine” an Asian man with a White woman.

When asked by CNN to simply generate an image of an interracial couple, meanwhile, the Meta tool responded with: “This image can’t be generated. Please try something else.”

Interracial couples, however, make up a substantial share of couples in America. Approximately 19% of married opposite-sex couples were interracial in 2022, according to US Census data, as were nearly 29% of opposite-sex unmarried couple households. And some 31% of married same-sex couples in 2022 were interracial.

Meta referred CNN’s request for comment to a September company blog post on building generative AI features responsibly. “We’re taking steps to reduce bias. Addressing potential bias in generative AI systems is a new area of research,” the blog post states. “As with other AI models, having more people use the features and share feedback can help us refine our approach.”

Meta’s AI image generator also has a disclaimer stating that images generated by AI “may be inaccurate or inappropriate.”

Despite the many promises of generative AI’s future potential emanating from the tech industry, the gaffes from Meta’s AI image generator are the latest in a spate of incidents that show how generative AI tools still struggle immensely with the concept of race.

Earlier this year, Google said it was pausing its AI tool Gemini’s ability to generate images of people after it was blasted on social media for producing historically inaccurate images that largely showed people of color in place of White people. OpenAI’s Dall-E image generator, meanwhile, has taken heat for perpetuating harmful racial and ethnic stereotypes.

Generative AI tools like the ones created by Meta, Google and OpenAI are trained on vast troves of online data, and researchers have long warned that they have the potential to replicate the racial biases baked into that information but at a much larger scale.

While seemingly well-intended, some of the recent attempts by tech giants to overcome this issue have embarrassingly backfired, revealing how AI tools might not be ready for prime time.
