Microsoft (NASDAQ:MSFT) has been considered one of the leaders in the AI race ever since its investment in OpenAI began paying off. Microsoft Azure has been taking market share from rivals, as it is the only cloud service provider [CSP] with exclusive access to the most popular closed-source Large Language Model [LLM] family at the moment, the GPT series from OpenAI.

Nonetheless, in the previous article, I covered how open-source models are catching up to closed-source models like the GPT series in performance. We discussed how a growing number of enterprises prefer open-source models, given that the more cost-effective and transparent nature of these LLMs better fits companies’ deployment strategies. As a result, I did not feel MSFT deserved its premium valuation, and downgraded the stock to a ‘hold’ rating. The stock is down over 10% since the publication of that article.

However, I recently decided to sell all my MSFT shares, and I am downgrading the stock to a ‘sell’ rating, given the intensifying threat from open-source, with rivals like Amazon’s (AMZN) AWS and Nvidia (NVDA) leveraging the power of open-source to undermine Microsoft Azure’s future growth potential.

Open-source is becoming a force to be reckoned with

Perhaps the biggest player in the open-source space is Meta Platforms (META), which open-sourced its latest and most powerful model, Llama 3.1, on 23rd July 2024. In an open letter accompanying the release, CEO Mark Zuckerberg pulled no punches against the CSPs boasting exclusive closed-source models, particularly the Microsoft-OpenAI duo:

a key difference between Meta and closed model providers is that selling access to AI models isn’t our business model. That means openly releasing Llama doesn’t undercut our revenue, sustainability, or ability to invest in research like it does for closed providers.

…

Developers can run inference on Llama 3.1 405B on their own infra at roughly 50% the cost of using closed models like GPT-4o, for both user-facing and offline inference tasks.

While Microsoft Azure is one of the cloud providers offering customers access to Meta’s Llama 3.1, it is also available through other platforms like AWS and Google Cloud. So there is no exclusivity advantage here that would enable Azure to continue taking market share from rivals. Furthermore, as Zuckerberg explicitly stated, Llama 3.1 is more cost-effective than OpenAI’s GPT-4o, particularly when enterprises run it “on their own infra”, referring to on-premises data centers, which we will cover later in the article as part of Nvidia’s offense strategy.

In a blog post at the end of August, Meta disclosed statistics reflecting the growth of Llama usage, including among cloud customers:

Llama models are approaching 350 million downloads to date (more than 10x the downloads compared to this time last year), and they were downloaded more than 20 million times in the last month alone, making Llama the leading open source model family.

Llama usage by token volume across our major cloud service provider partners has more than doubled in just three months from May through July 2024 when we released Llama 3.1.

Monthly usage (token volume) of Llama grew 10x from January to July 2024 for some of our largest cloud service providers.

The growing prevalence of open-source models undermines the value proposition of Microsoft’s Azure OpenAI service going forward, which saw significant engagement during the early days of this AI revolution.

Moreover, while Microsoft Azure has been considered the leading cloud provider in this AI revolution with its OpenAI partnership, AWS had been considered a laggard, in the absence of commensurate, exclusive AI models.

However, in a previous article, we covered how the tables may finally be turning in Amazon’s favor amid the rising popularity of open-source AI, suggesting that AWS has the opportunity to fight back for market share:

Amazon should strive to offer the highest-quality tools for helping enterprises use/customize open-source models for developing their own applications.

And this is exactly what AWS is now striving to do, with Mark Zuckerberg listing Amazon as one of the companies offering particularly valuable services to help its cloud customers leverage the power of Llama, as per his open letter:

Amazon, Databricks, and NVIDIA are launching full suites of services to support developers fine-tuning and distilling their own models.

Additionally, this was followed by Zuckerberg singling out AWS as a key partner in the rollout of Llama 3.1 on the Q2 2024 Meta Platforms earnings call:

And part of what we are doing is working closely with AWS, I think, especially did great work for this release.

Evidently, AWS is taking advantage of Meta’s open-source strategy, and enterprises’ growing preference for open-source models, to sustain its cloud market leadership position in this new AI era, inhibiting Azure from taking market share any further.

In fact, despite AWS being the largest CSP with a revenue run rate of above $105 billion, Amazon delighted investors last quarter with a faster-than-expected revenue growth rate of 20% for its cloud segment.

In the previous article covering MSFT, I also discussed research showing that most AI model deployments are currently focused on internal use cases. As this AI revolution evolves towards businesses deploying more customer-facing generative AI applications, enterprises will be further encouraged to opt for open-source models over closed-source models, given that open-source code is transparent and customizable, enabling developers to better control the kind of responses their AI assistants produce.

And it’s not just customer-facing applications that will require underlying code transparency, but reportedly also applications in sensitive industries like medicine and insurance.

An even greater level of transparency than most open-source AI models offer will be necessary when it comes to AI systems for sensitive fields like medicine and insurance, argues Ali Farhadi, CEO of the Allen Institute for Artificial Intelligence.

Furthermore, industries with greater data-security needs due to the sensitive nature of their businesses, such as healthcare and financial services, may also remain averse to migrating to the cloud at all, despite the major cloud providers proclaiming the need to shift to a cloud platform to optimally benefit from the new generative AI technology. The CEOs of Microsoft and Amazon often highlight the massive total addressable markets still ahead for their cloud businesses, citing an estimate that “90% of the global IT spend is still on premises”.

The truth, however, is that Nvidia is making it possible to deploy generative AI without having to migrate to a CSP. It may be difficult for some people to fathom how Nvidia could become a competitor to Microsoft, but in an interview alongside Dell Technologies CEO Michael Dell a few months ago, Nvidia CEO Jensen Huang explained how they are helping enterprises access generative AI outside of cloud computing platforms:

We want to bring this generative AI capability to every company in the world, and some of them can use it in the cloud. But many of the applications still has to be done on prem. And so in order for us to bring them into the generative AI revolution, we’re going to have to go through a partner, that can help us take this completely new reinvention of a computer, the data center, these AI factories, and help every customer.

In a previous article, we extensively covered Nvidia’s growing business of building AI factories (which are essentially private AI data centers) for enterprises that want to build and run generative AI applications on-premises. In fact, it is the availability of open-source models that makes on-premises deployment of generative AI possible, with Nvidia offering access to open-source models like Llama, Stable Diffusion XL and Mistral.

This reduces the need for businesses to migrate to the cloud, particularly in industries with higher data security needs, further undermining the bull case for MSFT.

Upside risks to bearish thesis

Now despite the rising threat of open-source models undermining the lucrative partnership between Microsoft and OpenAI, and Nvidia undercutting the secular trend of migration to cloud computing platforms by building AI factories on-premises, there are still factors that could continue driving MSFT shares higher.

For one, although Nvidia is making it possible to run generative AI applications on-premises, this may not be financially feasible for all companies, given the up-front costs of setting up such AI factories, as well as the subsequent costs of running computations on private servers versus cloud servers.

To put some numbers on this, the average power usage effectiveness (PUE)—a measure of data center efficiency calculated by dividing the total amount of power a facility consumes by the amount used to run the servers—of on-prem data centers was 2-3 vs. around 1.3 for cloud

– Brian Janous, Co-founder of Cloverleaf Infrastructure, Goldman Sachs report

The lower the PUE, the greater the energy efficiency of the data center, which in turn lowers computation costs. Additionally, the continued rise in energy costs could further discourage enterprises from running generative AI applications privately on-premises, and push them to migrate to public cloud platforms instead.
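To make the PUE comparison concrete, here is a minimal sketch that applies the definition quoted above (total facility power divided by the power used to run the servers) to the cited PUE figures. The 1,000 kW server load is a hypothetical number chosen purely for illustration, and the function name is my own.

```python
def facility_power_kw(it_load_kw: float, pue: float) -> float:
    """Total facility power implied by PUE = total facility power / IT power."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * pue


# Hypothetical server (IT) load, not a figure from the article.
IT_LOAD_KW = 1_000.0

# PUE values quoted in the Goldman Sachs report: ~1.3 for cloud, 2-3 on-prem.
cloud_kw = facility_power_kw(IT_LOAD_KW, 1.3)
on_prem_low_kw = facility_power_kw(IT_LOAD_KW, 2.0)
on_prem_high_kw = facility_power_kw(IT_LOAD_KW, 3.0)

print(f"Cloud facility power:   {cloud_kw:,.0f} kW")
print(f"On-prem facility power: {on_prem_low_kw:,.0f} to {on_prem_high_kw:,.0f} kW")
print(
    "On-prem draws "
    f"{on_prem_low_kw / cloud_kw:.2f}x to {on_prem_high_kw / cloud_kw:.2f}x "
    "the power of cloud for the same server load"
)
```

Under these assumptions, the same server workload draws roughly 1.5x to 2.3x as much total power on-premises as in the cloud, before considering any differences in electricity prices or hardware.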

In fact, Microsoft CEO Satya Nadella trumpeted the growing number of companies migrating to Azure on the Q4 FY2024 Microsoft earnings call:

We continued to see sustained revenue growth from migrations. Azure Arc is helping customers in every industry, from ABB and Cathay Pacific, to LaLiga, to streamline their cloud migrations. We now have 36,000 Arc customers, up 90% year-over-year… we now have over 60,000 Azure AI customers, up nearly 60% year-over-year, and average spend per customer continues to grow.

Now with regards to the threat from open-source technology, it is worth noting that Azure also offers access to the leading open-source models, and is obviously not just dependent on OpenAI’s LLMs. So in case early deployers of GPT models through the Azure platform decide they want to shift to an open-source model instead, they could still do so without needing to migrate to an alternative cloud provider.

That being said, the absence of exclusivity in the open-source space could slow down the pace at which Microsoft Azure can take market share from rivals like AWS and Google Cloud. Additionally, an increasing number of cloud customers opting for open-source models over Microsoft-OpenAI’s flagship GPT models would reduce the sales revenue opportunity for MSFT.

However, the use of open-source models through Azure would still generate demand for adjacent data management, storage and analytics services, which continue to see healthy growth as per Nadella’s remarks on the last earnings call:

Our Microsoft Intelligent Data Platform provides customers with the broadest capabilities spanning databases, analytics, business intelligence, and governance along with seamless integration with all of our AI services. The number of Azure AI customers also using our data and analytics tools grew nearly 50% year-over-year.

So far, the AI race between the major cloud providers has been centered around the breadth, quality and exclusivity of the models available through their platforms. However, amid the growing preference for open-source models, which will be available through all CSPs, the race could shift towards offering the best suite of adjacent tools and services that enable cloud customers to optimally customize the models for their specific needs.

As mentioned earlier, AWS has already been making notable leaps in offering the best tools and services that enable enterprises to optimally deploy Meta’s open-source Llama models. So in order for Microsoft Azure to stay competitive, it will need to continuously advance the proficiency of these data management and analytics services, as well as other essential tools for optimal AI model deployment.

Microsoft Financial Performance and Valuation

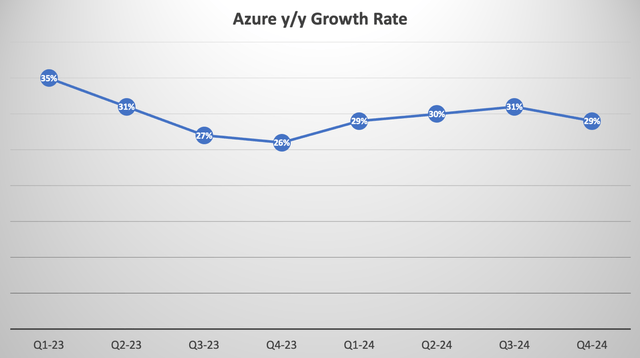

Last quarter, Microsoft Azure revenue grew by 29%, slightly below market expectations of 30%+.

Nexus Research, data compiled from company filings

Though as per the discussion between executives and analysts on the earnings call, it was weaker-than-expected growth in non-AI services that was behind the expectations miss. On the generative AI front, demand remained strong and continued to outstrip supply, as per CFO Amy Hood’s remarks:

Azure growth included 8 points from AI services where demand remained higher than our available capacity.

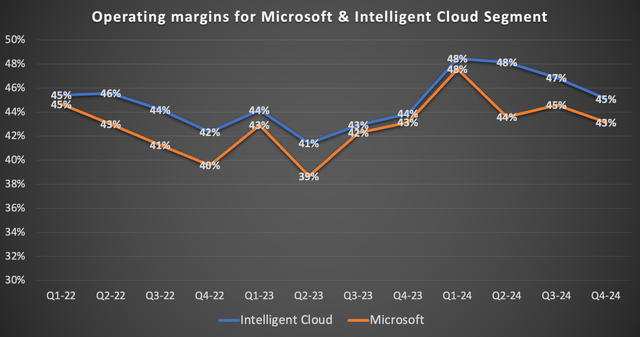

Now in terms of profitability, the Intelligent Cloud segment (consisting of Azure, as well as its traditional Servers business) saw its operating margin drop to 45%, while company-wide operating margin fell to 43%.

Nexus Research, data compiled from company filings

Nonetheless, Microsoft has been impressing the market by sustaining its overall profitability despite the heavy CapEx on AI infrastructure driving depreciation costs higher.

Furthermore, back in October 2023 on the Q1 FY2024 Microsoft earnings call, CEO Satya Nadella shared that:

the approach we have taken is a full stack approach all the way from whether it’s ChatGPT or Bing Chat or all our Copilots, all share the same model. So in some sense, one of the things that we do have is very, very high leverage of the one model that we used — which we trained, and then the one model that we are doing inferencing at scale. And that advantage sort of trickles down all the way to both utilization internally, utilization of third parties

Moreover, in an article following that earnings call, I had explained Nadella’s remarks in a simplified manner to enable investors to better understand how the company has been benefitting from the wide popularity of OpenAI’s LLMs, conducive to scaling advantages:

Multiple applications are being powered by a single large language model [LLM], namely OpenAI’s GPT-4 model. This includes Microsoft’s own applications like Bing Chat and its range of Copilots, as well as third-party developers choosing to use this model to power their own applications through the Azure OpenAI/ Azure AI platforms.

…

It enables Microsoft to scale its AI infrastructure more easily. The prevalent use of a single AI model simplifies the process of infrastructure management, allowing for greater cost efficiencies that are conducive to profit margin expansion. Moreover, the high cost of training the model can be amortized over the multiple AI applications using it, improving the return on investment, as the different revenue streams from the model exceed the cost of training it. These forms of advantages are the “very high leverage” Nadella is referring to in the citation earlier.

As more and more applications run on this single AI model, the greater the scalability of the model becomes, leading to incremental cost efficiencies.
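The amortization argument above can be sketched with simple arithmetic. This is only an illustration under stated assumptions: the training cost is a hypothetical figure in arbitrary units, the cost is assumed to be shared evenly across applications, and the function name is my own.

```python
def training_cost_per_app(training_cost: float, num_apps: int) -> float:
    """Share of a one-off training cost carried by each application,
    assuming the cost is spread evenly across all applications."""
    if num_apps < 1:
        raise ValueError("num_apps must be at least 1")
    return training_cost / num_apps


# Hypothetical one-off training cost in arbitrary units (not a real figure).
TRAINING_COST = 100.0

for num_apps in (1, 5, 20):
    share = training_cost_per_app(TRAINING_COST, num_apps)
    print(f"{num_apps:>2} application(s) -> {share:.1f} units of training cost each")
```

The more applications that share one model, the smaller the training cost each revenue stream has to cover. The same arithmetic runs in reverse if usage is split across many separately trained models.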

The point is, these cost efficiencies from the wide-scale deployment of the GPT models enable Microsoft to sustain its high profit margins. However, as we discussed earlier in the article, the growing preference for open-source models is likely to moderate demand for OpenAI’s closed-source LLMs going forward. And it’s not just open-source models that are gaining prevalence.

As enterprises across various industries have been experimenting with the new generative AI technologies, businesses are reportedly preferring smaller AI models over the larger models that catapulted Microsoft and OpenAI into leadership positions during the early innings of this AI revolution last year:

Companies are increasingly deploying smaller and midsize generative artificial intelligence models, favoring the scaled down, cost efficient technology over the large, flashy models that made waves in the AI boom’s early days.

…

Chief information officers say that for some of their most common AI use cases, which often involve narrow, repetitive tasks like classifying documents, smaller and midsize models simply make more sense. And because they use less computing power, smaller models can cost less to run.

– The Wall Street Journal

Now the good news is that in response to the growing preference for smaller models, Microsoft introduced its own suite of small language models in April 2024, named “Phi-3”. And on the last earnings call, CEO Nadella proclaimed that these models are already being used by large enterprises:

With Phi-3, we offer a family of powerful, small language models, which are being used by companies like BlackRock, Emirates, Epic, ITC, Navy Federal Credit Union, and others.

Microsoft has made the right move by also offering its own suite of smaller models in response to customer demand, sustaining Azure’s competitiveness against other model providers and supporting top-line growth.

In addition, diversification away from OpenAI was much needed, especially given the start-up’s morally questionable business practices that have resulted in various lawsuits from authors and publishers, claiming that Microsoft and OpenAI are profiting from unethical use of their work. Such dubious business practices by its key partner are one of the reasons I fell out of love with Microsoft stock.

So the fact that the tech giant is now increasingly promoting the use of alternative AI models is certainly a positive development.

However, serving many different smaller models inhibits the scaling advantages that the tech giant currently benefits from, undermining Microsoft’s future profit margin expansion potential.

Microsoft’s head start in the AI race thanks to its OpenAI partnership is one of the key reasons the market assigned a higher valuation multiple to MSFT than to other tech giants. However, as discussed throughout the article, this edge is slowly fading away, which raises the question of whether the stock still deserves to trade at a premium relative to its peers.

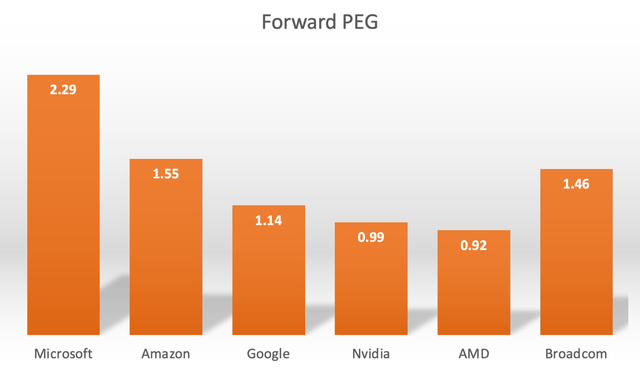

MSFT currently trades at a Forward PE ratio of 30.49x, which may not seem too lofty. But as followers will be aware, I prefer the Forward Price/Earnings-to-Growth [PEG] multiple as a more comprehensive measure of valuation, as it divides the Forward PE ratio by the expected future earnings growth rate.

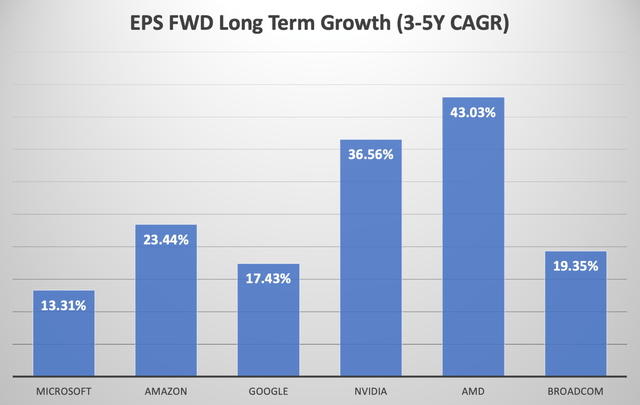

Below are the anticipated EPS growth rates (3-5yr CAGR) for some of the key AI beneficiaries in the semiconductors and cloud computing industries.

Nexus Research, data compiled from Seeking Alpha

Notably, Microsoft has the slowest projected EPS growth rate among the key AI giants, and when adjusting each stock’s Forward PE multiple by its expected earnings growth rate, we derive the following Forward PEG multiples.

Nexus Research, data compiled from Seeking Alpha

A Forward PEG multiple of 2.29x is expensive by itself, and the fact that other tech giants are trading at much more reasonable valuations further undermines the bull case for investing in MSFT.

For context, a Forward PEG of 1x would imply that a stock is trading at fair value. So the king of AI, Nvidia, is essentially trading around fair value, while boasting higher EPS growth prospects. On the other hand, Microsoft’s AI edge through OpenAI is fading amid the rise of open-source and smaller AI models, while Nvidia undermines the need to migrate to the cloud by enabling on-premises generative AI.
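As a quick sanity check on the valuation math, the sketch below applies the standard PEG definition (Forward PE divided by the expected EPS growth rate in percent) to the two MSFT figures quoted in this article. The implied growth rate it prints is back-calculated from those two numbers, so it may differ slightly from the charted consensus figure due to rounding. The function names are my own.

```python
def forward_peg(forward_pe: float, eps_growth_pct: float) -> float:
    """Forward PEG = Forward PE / expected annual EPS growth rate (in percent)."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is only meaningful for positive expected growth")
    return forward_pe / eps_growth_pct


def implied_growth_pct(forward_pe: float, peg: float) -> float:
    """Expected EPS growth rate (in percent) implied by a given PE and PEG."""
    if peg <= 0:
        raise ValueError("PEG must be positive")
    return forward_pe / peg


# Figures quoted in the article for MSFT.
MSFT_FORWARD_PE = 30.49
MSFT_FORWARD_PEG = 2.29

growth = implied_growth_pct(MSFT_FORWARD_PE, MSFT_FORWARD_PEG)
print(f"Implied MSFT EPS growth: {growth:.1f}% per year")

# Growth rate that would be needed for the same Forward PE to equal a PEG of 1x.
print(f"Growth needed for a 1x PEG: {implied_growth_pct(MSFT_FORWARD_PE, 1.0):.2f}%")
```

In other words, at a Forward PE of 30.49x, MSFT’s 2.29x PEG implies expected EPS growth of roughly 13% per year, whereas a 1x PEG at the same PE would require growth of about 30%.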

All these factors could make it much harder for MSFT to sustain its current valuation, necessitating a further correction in the stock price. I am downgrading MSFT to a ‘Sell’ rating.
