Advanced Micro Devices, Inc. (NASDAQ:AMD) is one of the top semiconductor companies in the world, with a strong foothold in the CPU and GPU markets. Both types of chips are core components of the servers used in data centers and by businesses. We previously covered how Nvidia Corporation (NVDA) dominates the data center space, with the company clearly highlighting the 6 types of data center customers it serves. More recently, we examined the effects of Artificial Intelligence, or AI, on Nvidia’s portfolio of chips and the numerous benefits AI brings.

AMD previously highlighted its AI strategy, particularly regarding its new MI300 GPUs. In its latest earnings briefing, AMD further emphasized continued progress in its Data Center GPU business and “significant customer traction” for its next-gen GPUs. At the start of the year, we had already highlighted AMD’s continuous GPU performance improvements.

In light of AMD’s new MI300 GPUs and its “design wins in AI deployments,” we analyze how AI has increased the competitiveness of its server GPUs and where they stand in comparison to Nvidia and Intel (INTC).

| AMD Revenue Breakdown ($ mln) | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|---|
| Data Center CPU Estimate | | | | 1,153 | 2,939 | 4,131 |
| Growth % | | | | | 155.0% | 40.6% |
| Data Center GPU Estimate | | | | 532 | 755 | 1,040 |
| Growth % | | | | | 41.8% | 37.7% |
| Data Center DPU Estimate | | | | | | 655.2 |
| Data Center FPGA Estimate | | | | | | 217 |
| Total Data Center | | | | 1,685 | 3,694 | 6,043 |
| Growth % | | | | | 119.2% | 63.6% |
| Client | | | | 5,189 | 6,887 | 6,201 |
| Growth % | | | | | 32.7% | -10.0% |
| Gaming | | | | 2,746 | 5,607 | 6,805 |
| Growth % | | | | | 104.2% | 21.4% |
| Embedded | | | | 143 | 246 | 4,552 |
| Growth % | | | | | 72.0% | 1750.4% |
| Total AMD | 5,253 | 6,475 | 6,731 | 9,763 | 16,434 | 23,601 |
| Growth % | | 23.3% | 4.0% | 45.0% | 68.3% | 43.6% |

Source: Company Data, Khaveen Investments.

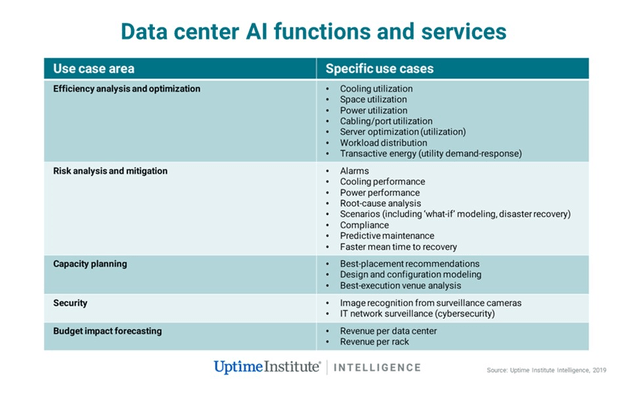

AI Demand Boosts for Data Centers

How AI Makes Data Centers Better

Venture Beat, Uptime Institute

AI can enhance data center operations in numerous ways, such as:

- Improved data security

- Reduced energy consumption

- Improved predictive maintenance, compliance, and capacity planning

- Improved reliability

- Advanced monitoring

- Server optimization

- Downtime reduction

The important point is that AI is a technology, and running it at its full potential takes a combination of products and systems. Among the most important of these are chips such as GPUs and CPUs.

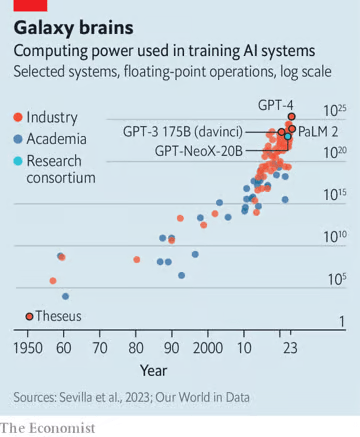

The Economist

The capabilities of AI large language models (LLMs) come at a cost: the need for high computing performance. This requirement stems from the sheer size of these models, whose parameter counts range from hundreds of billions to trillions. These parameters represent the connections and weights in the neural network that allow it to learn from data and perform complex tasks. The vast number of parameters requires massive computational power to manage and update them during training and inference.
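To put that compute cost in numbers, the sketch below uses a widely cited rule of thumb for dense transformer models. Training takes roughly 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens, and inference takes roughly 2 × N operations per generated token. The model size, token count, GPU count, peak throughput and utilization are all hypothetical inputs chosen for illustration. They are not figures from this article.

```python
# Rule-of-thumb compute estimates for dense transformer LLMs (approximations,
# not vendor figures): training ~ 6*N*D FLOPs, inference ~ 2*N FLOPs per token.

SECONDS_PER_DAY = 86_400


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute (forward + backward passes)."""
    return 6.0 * n_params * n_tokens


def inference_flops_per_token(n_params: float) -> float:
    """Approximate compute for one forward pass that generates one token."""
    return 2.0 * n_params


def training_days(total_flops: float, n_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Wall-clock days if every GPU sustains `utilization` of its peak rate."""
    sustained_flops = n_gpus * peak_flops_per_gpu * utilization
    return total_flops / sustained_flops / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical model: 175 billion parameters trained on 300 billion tokens.
    total = training_flops(175e9, 300e9)
    print(f"Training compute: {total:.2e} FLOPs")
    print(f"Inference: {inference_flops_per_token(175e9):.2e} FLOPs per token")
    # Hypothetical cluster: 1,000 GPUs at 1e15 peak FLOP/s each, 40% utilization.
    print(f"Training time: {training_days(total, 1_000, 1e15, 0.40):.1f} days")
```

Doubling either the parameter count or the number of training tokens doubles the training compute. This is why models with hundreds of billions to trillions of parameters need large GPU clusters.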

This is exactly where chips come in:

GPUs (Graphics Processing Units) are used in AI to accelerate the training of deep neural networks. GPUs are designed to perform parallel computations, which makes them ideal for training large neural networks. The parallel architecture of GPUs allows them to perform many calculations simultaneously, which speeds up the training process. – Bing Chat (GPT-4), Microsoft and OpenAI.

CPUs (Central Processing Units) are used in AI for a variety of tasks, including data preprocessing, model training, and inference. In addition, CPUs can be used for running AI algorithms that are not computationally intensive. For example, CPUs can be used for running rule-based systems or decision trees. – Bing Chat (GPT-4), Microsoft and OpenAI.

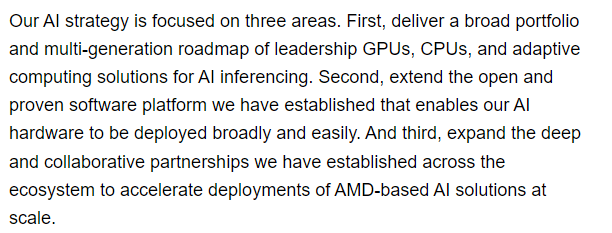

AMD AI Strategy

The pace of innovation in the Instinct GPUs in particular is happening even faster than AMD and its initial customers had anticipated. – The Next Platform.

Chipmakers understand their core importance in AI, and AMD even has a specific AI strategy to capitalize on this.

AMD

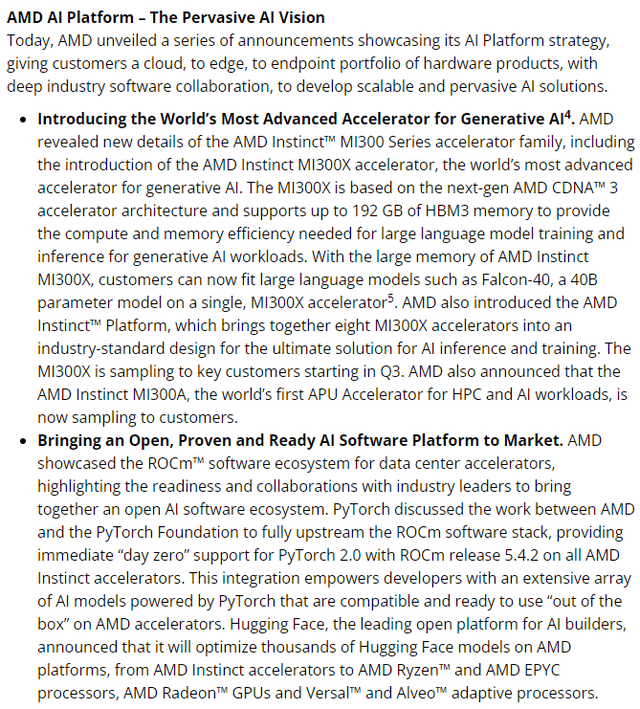

We evaluate the success of AMD’s AI strategy by examining each of the 3 areas it mentions:

- Broad product portfolio for AI

- Open software platform for AI

- Established partnerships

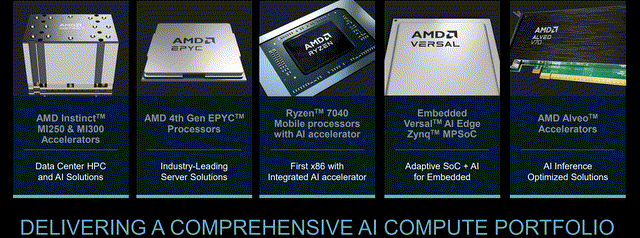

Product Portfolio

AMD has long been established as a leading player in the GPU and CPU space. We also previously discussed AMD’s expansion into FPGAs and DPUs, acquired through its Xilinx and Pensando acquisitions. Thus, we believe the company is indeed executing well in this area.

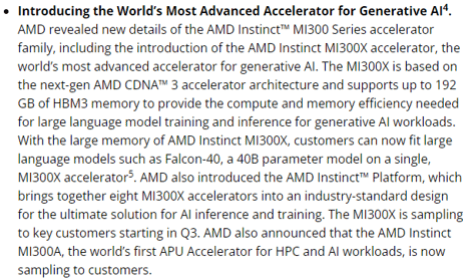

AMD

AMD’s AI portfolio includes its Instinct platform of data center GPUs, its EPYC line of data center CPUs and its Ryzen PC CPUs. With the acquisition of Xilinx, the company added Versal ACAP and Alveo FPGAs to its AI portfolio. In 2016, AMD first introduced its MI6 GPU as part of its Instinct family, which it touted as a “training and an inference accelerator for machine intelligence and deep learning.”

AMD

Furthermore, AMD has its Infinity Fabric interconnect technology which facilitates data communication between components such as the CPU and GPU for better performance, reduced latencies and higher power efficiency. It is designed to reduce data movement between storage units in GPUs, CPU cache or system memory “culminating in a coherent CPU + GPU technology that aims to improve system performance (and especially HPC performance) by leaps and bounds.”

Software

The memory requirements of large language models pose a significant challenge because they exceed the capacity of a single GPU or GPU cluster. To address this, specialized distributed software and hardware configurations are needed to manage data effectively during training. This is why good software is necessary for AI to operate at its fullest. The software AMD has deployed or supports includes AMD ROCm, PyTorch, Hugging Face, AMD Secure Encrypted Virtualization (SEV) technology and more.

- AMD ROCm: AMD ROCm is an open-source software platform for GPU computing that provides a set of tools and APIs for programming GPUs, offering flexibility and high performance. It supports various accelerator vendors and architectures, enabling researchers to use AMD Instinct accelerators for scientific and computational tasks.

- PyTorch: A widely used open-source machine learning library that offers GPU acceleration and deep learning tools. It can be used with AMD GPUs through AMD ROCm.

- Hugging Face: Hugging Face creates machine learning tools, including the Transformers library for natural language processing. AMD partnered with Hugging Face so its GPUs can be utilized and optimized for Hugging Face’s tools.

- AMD Secure Encrypted Virtualization: AMD Secure Encrypted Virtualization (SEV) is a technology that encrypts the memory of each virtual machine, enhancing security.
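As a practical illustration of the PyTorch-on-ROCm point above, the sketch below reports which GPU backend an installed PyTorch build exposes. It relies on two documented behaviours of ROCm builds of PyTorch:

- They reuse the `torch.cuda` API.
- They set `torch.version.hip`, which is `None` on CUDA and CPU-only builds.

The function name is our own. If PyTorch is not installed, the function simply returns a message saying so.

```python
def describe_gpu_backend() -> str:
    """Return a short description of the GPU backend PyTorch can see."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds set torch.version.hip; CUDA builds set torch.version.cuda;
    # CPU-only builds leave both as None.
    if getattr(torch.version, "hip", None):
        backend = f"ROCm/HIP {torch.version.hip}"
    elif torch.version.cuda:
        backend = f"CUDA {torch.version.cuda}"
    else:
        backend = "CPU-only build"

    # On ROCm builds, torch.cuda.* calls are routed to AMD GPUs via HIP.
    if torch.cuda.is_available():
        return f"{backend}, device 0: {torch.cuda.get_device_name(0)}"
    return f"{backend}, no GPU visible"


if __name__ == "__main__":
    print(describe_gpu_backend())
```

Because ROCm builds share the `torch.cuda` namespace, existing code that moves tensors with `.to("cuda")` generally runs unchanged on a supported AMD Instinct GPU.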

AMD

Data Center Partners

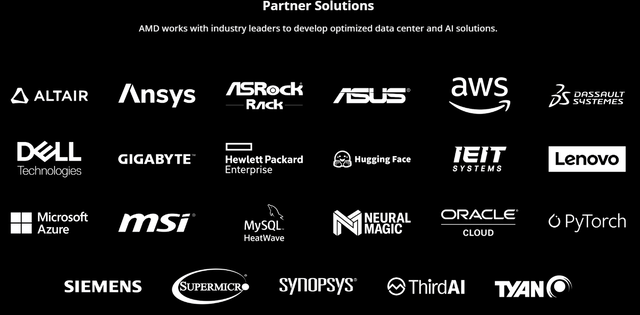

Chips may be the core of every technology, like the brain in a human, but they still need other hardware components to carry out the almost limitless number of functions they can perform. Just as the brain needs a skull to protect it and limbs to act on its nerve impulses, AMD needs a wide ecosystem of partners to reach the market.

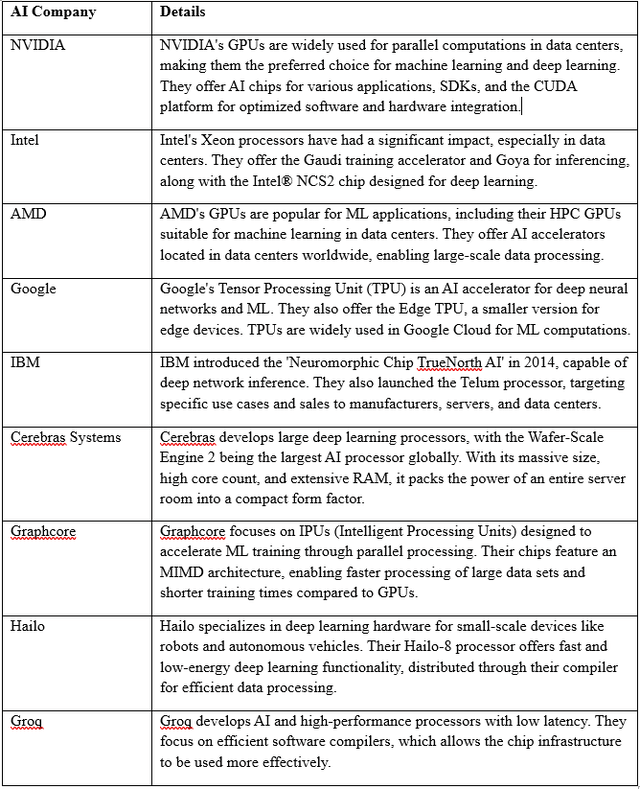

AMD

From the image above, AMD’s partners include cloud providers, server manufacturers and software providers. We believe its partnerships with certain customers are essential. For example, Microsoft (MSFT) and Amazon (AMZN) are among the top 3 cloud players, and Dell (DELL), Lenovo (LNVGY), and Hewlett Packard (HPE) are among the top 5 server makers.

What we find reassuring is the number of partners and customers on this list attesting to the breakthrough technology that AMD’s products bring. The companies highlighting their excitement about and commitment to AMD products include Microsoft, Dell, Lenovo and Ericsson (ERIC).

Outlook

As we identified, there are numerous use cases for AI in data centers. Data centers consist of numerous servers that enable the delivery of cloud infrastructure services to the market.

According to Institutional Real Estate, “the average full-scale data center is 100,000 square feet in size and runs around 100,000 servers.”

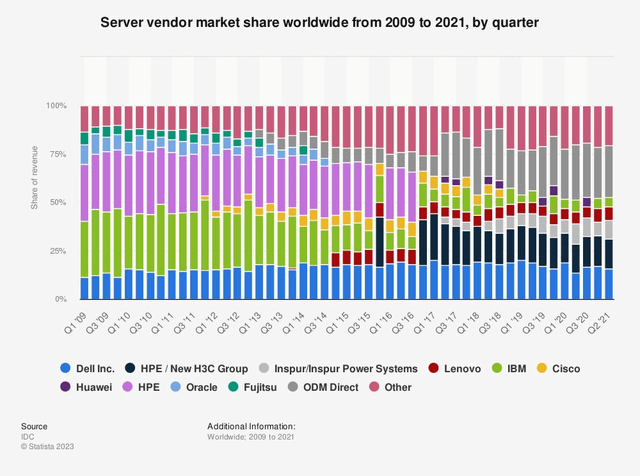

Server Market Share

IDC, Statista

In terms of market share, the top players seem to have maintained their market share over the past few years, but there is a notable increase in sales to direct ODMs.

| Server Market | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|
| Server Market Shipments (mln units) | 11.75 | 11.75 | 12.17 | 13.55 | 14.24 |
| Growth % | | 0.0% | 3.6% | 11.3% | 5.1% |
| Server ASP ($) | 6,769 | 6,635 | 6,389 | 6,138 | 5,960 |
| Growth % | | -2.0% | -3.7% | -3.9% | -2.9% |
| Market Revenue ($ bln) | 79.53 | 77.96 | 77.75 | 83.17 | 84.87 |
| Growth % | | -2.0% | -0.3% | 7.0% | 2.0% |

Source: IDC, Statista, Khaveen Investments.

Based on the table above, server market growth has been low over the past 5 years. Growth was driven mainly by shipments rather than ASPs, which declined every year. In our previous analysis of TSMC (TSM), we highlighted the increasing lifespan of servers, as “the average life of a traditional server has increased from between 3 to 4 years to 6 years for Tier 1 companies and up to 10 years for Tier 2 companies.” This explains the low server market growth. However, the cloud infrastructure market has seen tremendous growth, as shown below.
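The table’s revenue line is shipments multiplied by ASP. Each year’s revenue growth therefore factors into a shipment effect and a price effect:

(1 + revenue growth) = (1 + shipment growth) × (1 + ASP growth)

The sketch below recomputes this from the table’s figures. The recomputed revenues can differ from the table in the second decimal place because the source values are rounded.

```python
# Server market figures from the table above (IDC, Statista, Khaveen Investments).
YEARS = [2018, 2019, 2020, 2021, 2022]
SHIPMENTS_MLN = [11.75, 11.75, 12.17, 13.55, 14.24]  # million units
ASP_USD = [6769, 6635, 6389, 6138, 5960]             # average selling price, $


def yoy(series):
    """Year-over-year growth rates for consecutive values."""
    return [cur / prev - 1 for prev, cur in zip(series, series[1:])]


# million units * $ per unit = $ million; divide by 1,000 for $ billion.
revenue_bln = [s * a / 1_000 for s, a in zip(SHIPMENTS_MLN, ASP_USD)]

if __name__ == "__main__":
    for year, rev_g, ship_g, asp_g in zip(YEARS[1:], yoy(revenue_bln),
                                         yoy(SHIPMENTS_MLN), yoy(ASP_USD)):
        print(f"{year}: revenue {rev_g:+.1%} = "
              f"shipments {ship_g:+.1%} x ASP {asp_g:+.1%}")
```

Shipment growth is non-negative in every year while the ASP falls in every year. This is consistent with growth coming from volume rather than price.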

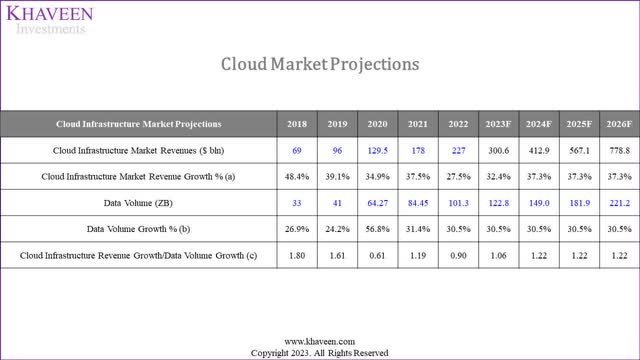

IDC, Khaveen Investments

Driven by the advent of AI, HPC, IoT and edge computing, data creation is projected to grow in the next 5 years. This would require greater cloud infrastructure to handle the increase in data. To determine the growth in cloud infrastructure revenues, we identified the factor of correlation of cloud infrastructure revenues to data volume growth over the past 10 years. – Khaveen Investments.

Despite the high cloud market growth, the server market has remained stagnant in comparison. The reason for the discrepancy between the cloud and server markets is the increasing performance of newer servers. According to IDC, server upgrades enable companies to run fewer but more powerful systems. Despite this improved performance, server prices have declined by 3.1% per year on average. This is because the performance gains came not from the server hardware itself (the enclosure that houses the infrastructure components) but from the chips within the server, such as CPUs and GPUs. In our previous analysis, we compared different generations of AMD and Intel server CPUs and found that performance has increased with each new generation since 2017. Furthermore, according to The Register, server efficiency increases with each new generation of hardware, with higher processor utilization. Therefore, we believe the increasing performance of server systems, driven by more powerful chips, enables companies such as cloud providers to expand their services without having to scale up server hardware.
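The 3.1% average annual ASP decline can be reproduced from the server market table in two ways:

- The simple average of the four yearly changes.
- The compound annual growth rate (CAGR) between 2018 and 2022.

The sketch below shows that both round to about -3.1% for this series. The choice between the two methods matters more when yearly changes are volatile.

```python
# Server ASPs ($) from the server market table.
ASP_USD = {2018: 6769, 2019: 6635, 2020: 6389, 2021: 6138, 2022: 5960}

values = list(ASP_USD.values())
changes = [cur / prev - 1 for prev, cur in zip(values, values[1:])]

simple_average = sum(changes) / len(changes)
# CAGR: the constant yearly rate that turns the first value into the last one.
cagr = (values[-1] / values[0]) ** (1 / (len(values) - 1)) - 1

if __name__ == "__main__":
    print(f"simple average: {simple_average:.2%}, CAGR: {cagr:.2%}")
```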

AI, particularly machine learning, needs a lot of data, which makes data processing a big task. Tasks such as cleaning and organizing data become complex with large datasets. Training machine learning models, particularly deep learning models with millions of parameters, requires considerable computational resources. The substantial computational demands of AI underscore the need for high-performance computing.

Chips deliver the performance gains that data centers using AI need to process the ever-increasing data volume. Given this, we believe demand for chips will skyrocket in line with data volume growth.

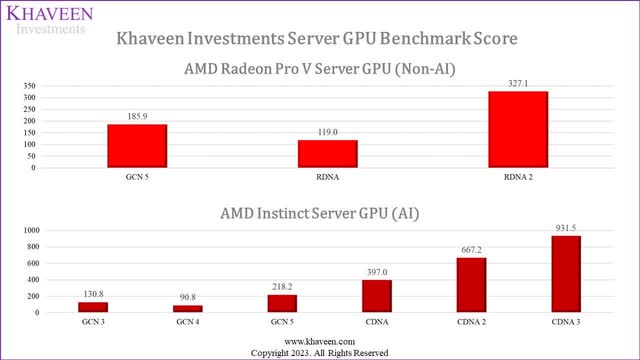

To compare the performance of AMD’s server GPUs across product generations (AI and non-AI), we derived a proprietary Server GPU Benchmark score. It encompasses various performance metrics, including Texture Rate and Processing Power (FP16, FP32 and FP64). We obtained data for a total of 15 AMD server GPU chips from its Radeon Pro V series (non-AI) and its Instinct series (AI), and grouped them by architecture.
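The benchmark score above is proprietary and its weighting is not disclosed. The sketch below therefore shows only one common way to combine several specifications into a single score:

1. Divide each metric by the same metric on a reference chip.
2. Take the geometric mean of those ratios.
3. Scale the result so the reference chip scores 100.

The metric names and example numbers are hypothetical placeholders, not TechPowerUp data, and this is not the authors’ method.

```python
from statistics import geometric_mean

# Hypothetical metric keys: texture rate plus FP16/FP32/FP64 throughput.
METRICS = ("texture_rate", "fp16", "fp32", "fp64")


def composite_score(chip: dict, reference: dict, metrics=METRICS) -> float:
    """Geometric mean of per-metric ratios versus a reference chip, x100.

    A geometric mean keeps one very large metric (e.g. FP16) from
    dominating the score the way an arithmetic mean of raw values would.
    """
    ratios = [chip[m] / reference[m] for m in metrics]
    return 100 * geometric_mean(ratios)


if __name__ == "__main__":
    # Purely illustrative numbers, not real chip specifications.
    reference = {"texture_rate": 400.0, "fp16": 20.0, "fp32": 10.0, "fp64": 0.5}
    newer = {"texture_rate": 800.0, "fp16": 80.0, "fp32": 20.0, "fp64": 10.0}
    print(f"{composite_score(newer, reference):.1f}")
```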

TechPowerUp, Khaveen Investments

| AMD Radeon Pro V Series Server GPU (Non-AI) | GCN 5 | RDNA | RDNA 2 | Average |
|---|---|---|---|---|
| Khaveen Investments Benchmark Score | 185.9 | 119.0 | 327.1 | |
| Growth % | | -36.0% | 175.0% | 69.5% |

| AMD Instinct Server GPU (AI) | GCN 3 | GCN 4 | GCN 5 | CDNA | CDNA 2 | CDNA 3 | Average |
|---|---|---|---|---|---|---|---|
| Khaveen Investments Benchmark Score | 130.8 | 90.8 | 218.2 | 397.0 | 667.2 | 931.5 | |
| Growth % | | -30.6% | 140.4% | 82.0% | 68.1% | 39.6% | 59.9% |
| Difference | | | | 213.6% | 560.9% | 284.7% | 353.1% |

Source: TechPowerUp, Khaveen Investments.

Based on the table, AMD’s AI GPUs outscore its non-AI GPUs in all 3 paired generations: CDNA vs. GCN 5, CDNA 2 vs. RDNA and CDNA 3 vs. RDNA 2. On average, the AI GPUs’ scores are 353.1% of their non-AI counterparts’, or roughly 3.5x. For its Radeon Pro V Series GPUs, the RDNA average benchmark score is lower than that of the prior GCN 5 generation. This is due to the lower average texture rate and processing power of its RDNA GPUs. We also compiled the average PassMark benchmark score for its RDNA PC GPUs and found it 17% lower than the prior GCN 5 average.
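The growth rates and the “Difference” row can be recomputed from the benchmark scores in the table. The sketch below assumes the pairing implied by the table layout: CDNA vs. GCN 5, CDNA 2 vs. RDNA and CDNA 3 vs. RDNA 2. It treats each “Difference” value as the Instinct score expressed as a percentage of the paired Radeon Pro V score. The results match the table to within a few tenths of a point, since the published scores are rounded.

```python
# Benchmark scores from the table above (TechPowerUp, Khaveen Investments).
RADEON_PRO_V = {"GCN 5": 185.9, "RDNA": 119.0, "RDNA 2": 327.1}   # non-AI
INSTINCT = {"GCN 3": 130.8, "GCN 4": 90.8, "GCN 5": 218.2,
            "CDNA": 397.0, "CDNA 2": 667.2, "CDNA 3": 931.5}       # AI

# Assumed pairing, inferred from the table layout: (Instinct, Radeon Pro V).
PAIRS = [("CDNA", "GCN 5"), ("CDNA 2", "RDNA"), ("CDNA 3", "RDNA 2")]


def generation_growth_pct(scores: dict) -> list:
    """Percentage change between consecutive architectures."""
    vals = list(scores.values())
    return [100 * (cur / prev - 1) for prev, cur in zip(vals, vals[1:])]


def mean(xs):
    return sum(xs) / len(xs)


ratios_pct = [100 * INSTINCT[ai] / RADEON_PRO_V[non_ai] for ai, non_ai in PAIRS]

if __name__ == "__main__":
    growth = generation_growth_pct(INSTINCT)
    print("Instinct growth %:", [round(g, 1) for g in growth])
    print("Average Instinct growth %:", round(mean(growth), 1))
    print("Instinct as % of Radeon Pro V:", [round(r, 1) for r in ratios_pct])
    print("Average:", round(mean(ratios_pct), 1))
```

An average of about 353% means the AI generations score roughly 3.5 times their paired non-AI generations, or about 250% higher.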

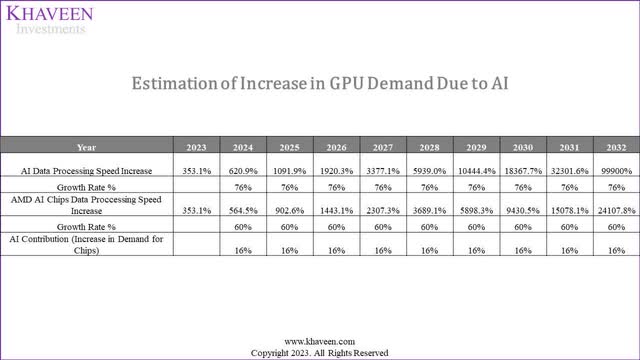

Khaveen Investments

To estimate the increase in GPU demand due to AI, we took the following steps:

1. We estimated how much faster AI can process data. We based this on ITBrief’s figure of a 1000x increase (equivalent to 99,900%), which we assumed would be achieved over 10 years.
2. We estimated how much faster AMD’s AI GPUs can process data. We compared our derived benchmark scores for AMD’s Radeon Pro V Series and Instinct generations to obtain the % difference (353.1%), then applied the Instinct series’ historical average benchmark score growth of 60% through 2032.
3. We compared the growth rate of AI data processing speed (76%) with that of AMD AI chips’ data processing speed (56%). This gives a shortfall of 16%, which implies the market needs an additional 16% of GPUs per year to support AI.

Thus, we believe the rise of AI could boost demand for AMD server GPUs by 16% over our baseline forecast.
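Two small conversions underpin this estimate. The first turns a speed-up multiple into a percentage increase: 1000x is +99,900%, because the increase excludes the original 1x. The second compounds a benchmark score forward at a constant growth rate. The sketch below covers only these mechanics and does not reproduce the authors’ full shortfall calculation. The base year, horizon and starting score in the example are assumptions for illustration.

```python
def multiple_to_pct_increase(multiple: float) -> float:
    """Express an N-times speed-up as a percentage increase over the original."""
    return (multiple - 1) * 100


def compound(value: float, annual_rate: float, years: int) -> float:
    """Grow `value` at a constant annual rate for a number of years."""
    return value * (1 + annual_rate) ** years


if __name__ == "__main__":
    print(f"1000x = +{multiple_to_pct_increase(1000):,.0f}%")
    # Assumption: start from the CDNA 3 score (931.5) in 2023 and apply the
    # ~60% historical Instinct growth rate for each year through 2032.
    for year in (2024, 2028, 2032):
        print(year, round(compound(931.5, 0.60, year - 2023), 1))
```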

AMD AI GPU Advantages

AMD claims that it has the “world’s most advanced accelerator for Generative AI.” While we recognize AMD’s prowess, we attempt to determine the validity of this ourselves by analyzing AMD’s AI GPU in comparison with its top two competitors, Intel and Nvidia.

The Instinct MI300X is “the most complex thing we’ve ever built.” – CEO Lisa Su.

AMD

GPUs (Graphics Processing Units) are typically preferred over CPUs for training deep neural networks because they can perform matrix operations much faster than CPUs. – Bing Chat (GPT-4), Microsoft and OpenAI.

GPUs excel in training deep neural networks due to their ability to accelerate matrix operations, perform parallel computations, and handle large networks efficiently, thanks to their parallel architecture, ultimately speeding up the training process.

AMD

In addition, GPUs can be used for inference, which is the process of using a trained model to make predictions on new data. Inference requires less computational power than training, so it can be performed on a wider range of devices, including mobile phones and embedded systems. – Bing Chat (GPT-4), Microsoft and OpenAI.

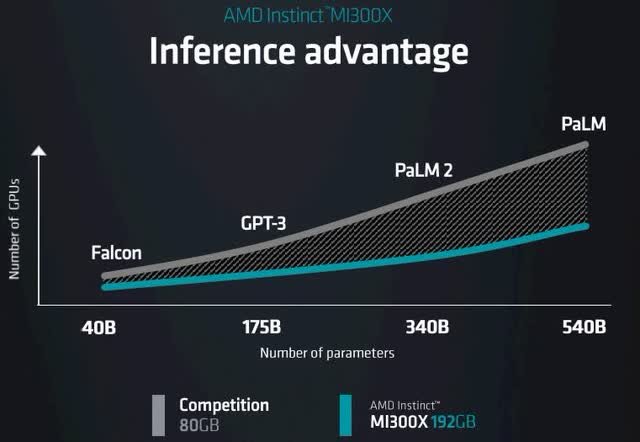

AMD claims an inference advantage in its investor presentation slide above. The slide compares the Instinct MI300X’s capabilities with those of competitors and highlights that, across 4 LLMs, it needs considerably fewer GPUs than they do. However, as we show in the following section, the competition has caught up significantly.
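Much of AMD’s claimed GPU-count advantage comes down to memory capacity. A model’s weights alone take roughly parameters × bytes per parameter, and every byte must fit somewhere in GPU memory. The sketch below computes a lower bound on the number of GPUs needed just to hold the weights. It uses the capacities from the comparison table in the next section: 192GB for the MI300X, 188GB for the H100 NVL pair and 96GB for Gaudi 2. The 175-billion-parameter model and 16-bit weights are assumptions. Real deployments also need memory for activations and the key-value cache, which the optional `overhead` factor only crudely approximates.

```python
import math

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Memory capacities (GB) from the data center GPU comparison table.
# The H100 NVL entry is a two-GPU pair, so it is counted as one unit here.
GPU_MEMORY_GB = {"AMD MI300X": 192, "Nvidia H100 NVL (2-GPU pair)": 188,
                 "Intel Gaudi 2": 96}


def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    """Memory needed for the model weights alone (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus(n_params: float, gpu_memory_gb: float,
             dtype: str = "fp16", overhead: float = 1.0) -> int:
    """Lower bound on unit count so combined memory holds the scaled weights."""
    needed_gb = weight_memory_gb(n_params, dtype) * overhead
    return math.ceil(needed_gb / gpu_memory_gb)


if __name__ == "__main__":
    n_params = 175e9  # hypothetical 175B-parameter model
    print(f"FP16 weights: {weight_memory_gb(n_params):.0f} GB")
    for name, mem in GPU_MEMORY_GB.items():
        print(f"{name}: at least {min_gpus(n_params, mem)} unit(s)")
```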

Performance

The table below compares the latest Data Center GPU chips of AMD, Intel and Nvidia based on various performance and informational metrics.

| Data Center GPU Comparison Metrics | AMD (MI300X) | Nvidia (H100 NVL) | Intel (Gaudi 2) |
|---|---|---|---|
| Process | 5nm (TSMC) | 4nm (TSMC) | 7nm (TSMC) |
| Transistors (bln) | 153 | 160 | >100 |
| Engine Clock Peak | 1.70GHz | 1.98GHz | – |
| FP16 Peak (Teraflops) | 1,532 | 3,958 | 839 |
| INT8 (Teraops) | 3,064 | 7,916 | 1,628 |
| Memory Clock | 1.9GHz | 5.1GHz | 1.56GHz |
| Memory Capacity | 192GB HBM3 | 188GB | 96GB |
| Memory Bandwidth | 5.2TB/s | 7.8TB/s | 2.45TB/s |
| Interconnect Bandwidth | 896GB/s | 600GB/s | 100GB/s |
| Max Power Consumption | 700W | 800W (2x400W) | 600W |
| Price ($) | $27,381 (Estimate) | $80,000 (Estimate) | – |

Source: Company Data, The Next Platform, VideoCardz, Intel Habana, ANAND Tech, Gaming Deputy, HPCWire, TechPowerUp, Khaveen Investments.

Starting with process technology, the AMD MI300X is built on a 5nm process from TSMC. The Nvidia H100 NVL is fabricated on a 4nm process, which is even more advanced and efficient. The Intel Gaudi 2, on the other hand, is manufactured on a 7nm process, which is less advanced than those used by AMD and Nvidia.

Nvidia’s H100 NVL has the highest number of transistors, at 160 bln, while AMD’s MI300X follows closely with 153 bln. Intel’s Gaudi 2 is reported to have more than 100 bln transistors. In terms of peak engine clock, the Nvidia H100 NVL leads at 1.98GHz, followed by the AMD MI300X at 1.7GHz. In terms of performance, Nvidia’s H100 NVL leads in peak FP16 performance with a whopping 3,958 teraflops. Nvidia’s strength also shows in INT8, at 7,916 teraops, more than 2.5x AMD’s 3,064.

However, when it comes to memory capacity, AMD’s MI300X offers the most, with 192GB of HBM3, followed by Nvidia’s 188GB. Intel’s Gaudi 2 has the smallest memory capacity at 96GB. Nvidia’s H100 NVL leads in memory bandwidth at 7.8TB/s, followed by AMD’s MI300X at 5.2TB/s and Intel’s Gaudi 2 at 2.45TB/s. AMD’s MI300X offers the highest interconnect bandwidth at 896GB/s, while Nvidia’s H100 NVL provides 600GB/s and Intel’s Gaudi 2 provides 100GB/s.

Nvidia’s H100 NVL has the highest maximum power consumption at 800W, which is not surprising given its high performance. AMD’s MI300X draws up to 700W, and Intel’s Gaudi 2 has the lowest maximum power draw at 600W.

However, Intel’s Gaudi 2 is claimed to surpass Nvidia in Hugging Face’s benchmarks of the BridgeTower vision-language model. It ran up to 2.5x faster than Nvidia’s A100 and 1.4x faster than the H100.
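Raw peak figures are easier to compare once normalized by power and price. The sketch below divides the table’s FP16 peak by maximum power, and memory capacity by estimated price. Treat the results as rough, for three reasons:

- Vendor peak figures may be quoted with or without sparsity.
- The H100 NVL figures describe a two-GPU pair, hence the 2x400W entry.
- The prices are estimates, and none is available for Gaudi 2.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Accelerator:
    name: str
    fp16_tflops: float          # peak FP16, as listed in the comparison table
    memory_gb: float
    max_power_w: float
    price_usd: Optional[float]  # None where the table has no estimate

    def tflops_per_watt(self) -> float:
        return self.fp16_tflops / self.max_power_w

    def gb_per_1k_usd(self) -> Optional[float]:
        if self.price_usd is None:
            return None
        return self.memory_gb / (self.price_usd / 1_000)


CHIPS = [
    Accelerator("AMD MI300X", 1532, 192, 700, 27_381),
    Accelerator("Nvidia H100 NVL (2-GPU pair)", 3958, 188, 800, 80_000),
    Accelerator("Intel Gaudi 2", 839, 96, 600, None),
]

if __name__ == "__main__":
    for chip in sorted(CHIPS, key=Accelerator.tflops_per_watt, reverse=True):
        per_dollar = chip.gb_per_1k_usd()
        price_note = (f"{per_dollar:.2f} GB per $1k" if per_dollar is not None
                      else "no price estimate")
        print(f"{chip.name}: {chip.tflops_per_watt():.2f} FP16 TFLOPS/W, {price_note}")
```

On these listed figures, the H100 NVL pair delivers the most FP16 throughput per watt even though it draws the most power. Of the two chips with price estimates, the MI300X offers more memory per dollar.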

Full Stack Advantage

| Data Center Portfolio | Nvidia | AMD | Intel |
|---|---|---|---|
| CPU | Launching 2024 | Yes | Yes |
| GPU | Yes | Yes | Yes |
| DPU | Yes | Yes | Yes (IPU) |
| Networking Interconnects | NVLink | Infinity Fabric | CXL Interconnect |
| Software | Yes | Yes | Yes |
| Integrated Hardware Solution | Yes (DGX) | N/A | N/A |

Source: Company Data, Khaveen Investments.

We updated our comparison table of the data center portfolios of Nvidia, AMD and Intel. In terms of CPUs, AMD and Intel currently offer x86 server CPUs, while Nvidia is launching Arm-based CPUs in 2024. As we previously determined, AMD has the advantage in CPUs, with a higher average benchmark score than Intel. All 3 companies have GPUs. As explained above, Nvidia has the most powerful product with its new H100 NVL, launched in 2023. All 3 companies also have DPUs and networking interconnects.

In terms of software, all 3 companies have similar integrations with open-source AI frameworks and tools such as Hugging Face, PyTorch and TensorFlow, as AMD, Intel and Nvidia have all formed partnerships around them. However, in our previous analysis, we identified Nvidia as having the greatest number of AI software integrations. These include its own proprietary software solutions and development kits, such as “over 450 NVIDIA AI libraries and software development kits to serve industries such as gaming, design, quantum computing, AI, 5G/6G, and robotics.” Intel also has AI software, such as the Intel oneAPI AI Analytics Toolkit for accelerating machine learning applications.

Partnerships

We covered earlier how AMD has established an ecosystem of partners at various levels of the value chain, such as cloud players and server manufacturers. However, we found that this is not a specific competitive advantage for AMD, as Intel and Nvidia have established similar partner ecosystems.

Nvidia

As seen in the image above, Nvidia also has partnerships with server manufacturers, software partners and others, including the top 3 server makers.

Intel also works with various partners. These include Microsoft and Google, two of the top 3 cloud providers, as well as top 5 server makers such as Dell, HPE, Inspur, Lenovo and IBM (IBM).

Outlook

While AMD claims its GPUs as the most advanced, our analysis of its competitors shows that Nvidia and Intel also have their own legs to stand on. In terms of performance, all three companies have advantages in certain areas. It would be precipitous to label one company as the clear winner. We believe each company has made tremendous leaps in innovation and has merits to claim its competitive place in the market. Regarding having a full-stack advantage, all three companies again have established a similar portfolio of products. Even the software and hardware integrations used to link these different chips are on par. While Nvidia is the slowest to market in CPUs (launching in 2024), it has a tremendous lead in the market for GPU share and also has the most AI software integrations.

With these 3 companies dominating the entire GPU market, it is not surprising that their partner ecosystems are similar, with all three ingrained with the same companies across the value chain. We believe all three companies are competitive in their own right. We refrain from assigning a competitive factor score to any one company and consider all three to be on par.

AMD Data Center Outlook

We have previously covered AMD’s data center market. While AMD does not break down the data center segment by type of chip, we were able to estimate the breakdown ourselves through numerous calculations as we previously explained.

| AMD Revenue Breakdown ($ mln) | 2020 | 2021 | 2022 |
|---|---|---|---|
| Data Center CPU Estimate | 1,153 | 2,939 | 4,131 |
| Growth % | | 155.0% | 40.6% |
| Data Center GPU Estimate | 532 | 755 | 1,040 |
| Growth % | | 41.8% | 37.7% |
| Data Center DPU Estimate | | | 655.2 |
| Data Center FPGA Estimate (Xilinx) | | | 217 |
| Total Data Center | 1,685 | 3,694 | 6,043 |
| Growth % | | 119.2% | 63.6% |

Source: Company Data, Khaveen Investments.

Revenue Model

In our previous coverage of Nvidia, we identified its unique subscription-based model for its DGX. Nvidia’s DGX platform is a powerful system that combines NVIDIA’s products, such as its H100 data center GPUs, with other chips such as CPUs from AMD and Intel. It also includes software such as Nvidia AI Enterprise, which features pre-trained AI models and optimized frameworks. A single Nvidia DGX unit costs between $200,000 and $400,000. Nvidia also offers a subscription model for its DGX SuperPODs, which have 4 DGX servers, at $90,000 per month. In comparison, a single Nvidia A100 server GPU is priced at $10,000.
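Using the figures above, a simple break-even comparison shows how the subscription compares with buying the hardware outright. The sketch takes the text’s numbers: 4 DGX servers at $200,000 to $400,000 each and a $90,000 monthly fee. It ignores power, hosting, support and financing costs, any of which would shift the result.

```python
def breakeven_months(purchase_cost: float, monthly_fee: float) -> float:
    """Months of subscription fees that add up to the upfront purchase cost."""
    return purchase_cost / monthly_fee


SERVERS = 4                          # DGX servers per subscription, per the text
MONTHLY_FEE = 90_000                 # $ per month
UNIT_PRICE_RANGE = (200_000, 400_000)  # $ per DGX unit

if __name__ == "__main__":
    for unit_price in UNIT_PRICE_RANGE:
        months = breakeven_months(SERVERS * unit_price, MONTHLY_FEE)
        print(f"${unit_price:,} per DGX: break-even after {months:.1f} months")
```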

AMD’s EPYC 7742 server CPU is priced at $6,950, while its MI225 server GPU was reported to be priced at $16,500 in the Japanese market. Intel’s Xeon Platinum 8180 server CPU has a recommended price of $10,000.

AMD

Official prices have not been disclosed. However, the estimates in our comparison table put Nvidia’s H100 NVL at about $80,000, nearly 3x the estimated $27,381 for AMD’s MI300X.

Market Share

Data Center GPU

| Data Center GPU Share Estimate | 2020 | 2021 | 2022 |
|---|---|---|---|
| Nvidia Revenue ($ mln) | 6,696 | 10,613 | 15,005 |
| Nvidia % share | 92.6% | 88.6% | 88.9% |
| AMD Revenue ($ mln) | 532 | 755 | 1,040 |
| AMD % share | 7.4% | 6.3% | 6.2% |
| Intel Revenue ($ mln) | | 605 | 836 |
| Intel % share | 0.0% | 5.0% | 5.0% |

Source: Company Data, JPR, Khaveen Investments.

Based on our server GPU market share table above, Nvidia has dominated the market over the past 3 years, with almost 89% share in 2022. AMD is in second place, with a fairly stable share over the past 2 years. In 2021, Intel launched its Ponte Vecchio data center GPU and took share from Nvidia and AMD that year, and its share remained stable in 2022.
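The share figures follow directly from the revenue rows: each company’s revenue is divided by the three-company total. The sketch below recomputes them, treating Intel’s blank 2020 revenue as zero to match its 0.0% share in the table. The recomputed values agree with the table to within about 0.1 percentage point.

```python
# Data center GPU revenue ($ mln) from the table above.
REVENUE = {
    2020: {"Nvidia": 6_696, "AMD": 532, "Intel": 0},
    2021: {"Nvidia": 10_613, "AMD": 755, "Intel": 605},
    2022: {"Nvidia": 15_005, "AMD": 1_040, "Intel": 836},
}


def shares_pct(revenue_by_company: dict) -> dict:
    """Each company's share of the combined revenue, in percent."""
    total = sum(revenue_by_company.values())
    return {name: 100 * rev / total for name, rev in revenue_by_company.items()}


if __name__ == "__main__":
    for year, revenue in REVENUE.items():
        shares = shares_pct(revenue)
        print(year, {name: f"{pct:.1f}%" for name, pct in shares.items()})
```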

Data Center CPU Market

PassMark, Khaveen Investments

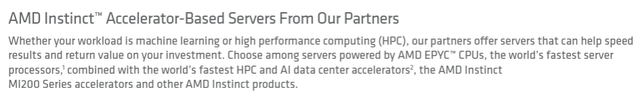

Over the past 10 years, Intel has dominated the server CPU market with over 90% market share. However, since 2021, AMD has gained share at Intel’s expense, though it remains much smaller than Intel.

FPGA Market

PassMark, Khaveen Investments

According to our FPGA market share estimates, Xilinx dominated the market with a 53% share in 2022, with Intel trailing at 26.7%. Both companies’ shares have been stable over the past 2 years.

Outlook

As we established earlier, our stance is that the three companies are on par in the server GPU market. Their market shares over the past three years seem to corroborate this, as all have been relatively stable. We believe their market shares will remain stable going forward. Similarly, we expect market shares in FPGAs and DPUs to remain stable, which is what we factored into our projections. For CPUs, Intel managed to regain some share in the server CPU market between Q1 and Q3 2023. We believe this is due to the launch of Xeon Sapphire Rapids, which, as we identified, delivered a significant performance improvement over its previous generation. However, based on our previous analysis of AMD’s competitive edge over Intel in server CPUs, we still expect AMD to gain slight market share going forward.

Risk: Competition in AI Chips

Alexander Thamm, Khaveen Investments

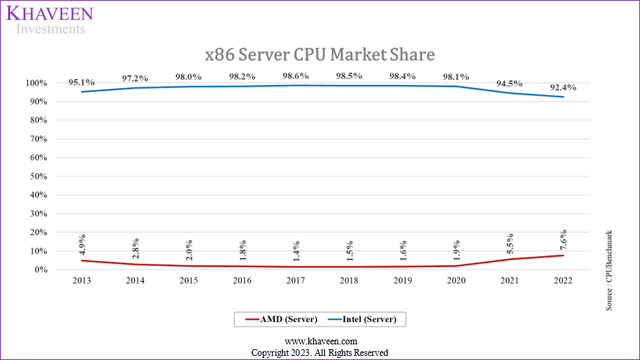

AMD, Intel, and Nvidia are the clear, established chipmakers in the market and at the forefront of AI. However, we believe AI has opened the door for other companies to enter the market or enhance their in-house chip development. As we identified above, companies like Google have developed AI accelerators for internal use, reducing their reliance on chipmakers. Other companies like IBM, Cerebras Systems, Graphcore, Hailo, and Groq have also developed AI processors that could eat into the markets of AMD, Intel and Nvidia.

Valuation

| AMD Revenue Forecast ($ mln) | 2022 | 2023F | 2024F | 2025F |
|---|---|---|---|---|
| Client (PC CPU) | 6,201 | 4,253 | 5,681 | 6,491 |
| Growth % | -10.0% | -31.4% | 33.56% | 14.27% |
| Gaming Console Estimate | 5,740 | 6,371 | 7,001 | 7,632 |
| Growth % | 81.53% | 10.99% | 9.90% | 9.01% |
| Gaming (PC GPU) Estimate | 1,065 | 964 | 1,156 | 1,281 |
| Growth % | -56.4% | -9.5% | 19.9% | 10.8% |
| Data Center CPU Estimate | 4,131 | 3,121 | 4,880 | 6,606 |
| Growth % | 40.57% | -24.44% | 56.34% | 35.37% |
| Data Center GPU Estimate | 1,040 | 2,000 | 3,067 | 4,703 |
| Growth % | 37.69% | 92.4% | 53.3% | 53.3% |
| Data Center DPU Estimate | 217 | 275 | 344 | 423 |
| Growth % | | 26.9% | 24.9% | 22.9% |
| Data Center FPGA Estimate | 655.2 | 751 | 860 | 986 |
| Growth % | | 14.60% | 14.60% | 14.60% |
| Embedded | 4,552 | 5,217 | 5,978 | 6,851 |
| Growth % | | 14.60% | 14.60% | 14.60% |
| Total | 23,601 | 22,952 | 28,967 | 34,973 |
| Growth % | 43.6% | -2.8% | 26.2% | 20.7% |

Source: Company Data, Khaveen Investments.

We updated our revenue projections for AMD by segment. For the Client segment, we prorated its Q1 to Q3 revenues to estimate a 31.4% decline for the full year. For its Gaming GPU segment, we updated our shipment growth projections to reflect our PC market growth forecast of -11.7%, before a recovery in 2024.
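Here, prorating means scaling the first three quarters’ revenue to a full year on a straight-line basis, by multiplying by 4/3. This assumes Q4 matches the average of Q1 to Q3. The sketch below shows the calculation. Working backward, it also shows what Q1 to Q3 total the 2023F Client estimate of $4,253 mln implies under that assumption. The helper names are our own.

```python
def annualize_ytd(ytd_revenue: float, quarters_reported: int = 3) -> float:
    """Straight-line full-year estimate from year-to-date revenue."""
    return ytd_revenue * 4 / quarters_reported


def implied_ytd(full_year_estimate: float, quarters_reported: int = 3) -> float:
    """Year-to-date revenue consistent with a straight-line full-year estimate."""
    return full_year_estimate * quarters_reported / 4


def growth_pct(current: float, prior: float) -> float:
    return 100 * (current / prior - 1)


if __name__ == "__main__":
    client_2022, client_2023f = 6_201, 4_253  # $ mln, from the forecast table
    print(f"Implied Q1-Q3 2023 Client revenue: {implied_ytd(client_2023f):,.0f}")
    print(f"Full-year change: {growth_pct(client_2023f, client_2022):.1f}%")
```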

For its Data Center segment, we updated our 2023 revenue projections with separate CPU, GPU, DPU, and FPGA revenue estimates:

- Data Center GPU: we based 2023 on management’s guidance of $2 bln in revenue from its latest earnings briefing. From 2024 onward, we based our forecast on our cloud market growth projections, which average 37.3%, and factored in the 16% increase we derived from our estimate of additional chip demand for AI.
- Data Center FPGA and DPU, as well as the Embedded segment: we based growth on our forecast CAGRs for the FPGA and DPU markets.
- Data Center CPU: for 2023, we prorated AMD’s Q1 to Q3 total Data Center revenues and subtracted our GPU, FPGA and DPU revenue estimates. We expect a recovery in 2024 based on our previous projections of its shipment and ASP growth.
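The Data Center GPU rows can be reproduced by compounding the $2 bln 2023 base at the cloud growth rate plus the 16% AI uplift. The table’s 53.3% growth matches adding the two rates (37.3% + 16%). Compounding them multiplicatively, (1.373 × 1.16) − 1, would instead give about 59.3%. The sketch below shows both. The projected values land within a few $ mln of the table’s 3,067 and 4,703, presumably because the underlying cloud growth rate is unrounded.

```python
BASE_2023 = 2_000       # $ mln, management guidance cited in the text
CLOUD_GROWTH = 0.373    # average cloud market growth projection
AI_UPLIFT = 0.16        # estimated extra GPU demand from AI

ADDITIVE_RATE = CLOUD_GROWTH + AI_UPLIFT                        # 53.3%
MULTIPLICATIVE_RATE = (1 + CLOUD_GROWTH) * (1 + AI_UPLIFT) - 1  # ~59.3%


def project(base: float, rate: float, years: int) -> list:
    """Revenue for each of the next `years` years at a constant growth rate."""
    values, value = [], base
    for _ in range(years):
        value *= 1 + rate
        values.append(value)
    return values


if __name__ == "__main__":
    for label, rate in (("additive", ADDITIVE_RATE),
                        ("multiplicative", MULTIPLICATIVE_RATE)):
        y2024, y2025 = project(BASE_2023, rate, 2)
        print(f"{label} ({rate:.1%}): 2024F {y2024:,.0f}, 2025F {y2025:,.0f}")
```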

Khaveen Investments

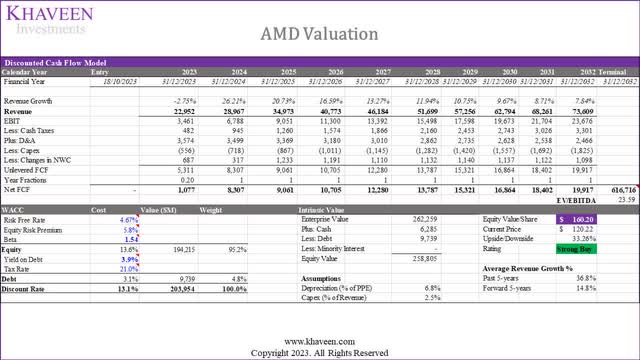

We valued the company with a discounted cash flow (“DCF”) model, as its FCF margins have improved significantly, averaging 20.4% over the past 2 years. Using a discount rate of 13.1% (the company’s WACC), we derived an upside of 33.3% from our DCF model. We based the terminal value on the average EV/EBITDA of U.S.-only chipmakers, 23.59x.
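A DCF with an exit-multiple terminal value has two parts:

- Each forecast year’s free cash flow, discounted at the discount rate.
- A terminal value equal to final-year EBITDA times an EV/EBITDA multiple, also discounted.

The sketch below implements that structure with the 13.1% discount rate and 23.59x multiple from the text. The cash flows, EBITDA, net cash, share count and share price in the example are hypothetical placeholders, not the authors’ model inputs, so the output is not their price target.

```python
def dcf_enterprise_value(fcfs, terminal_ebitda, exit_multiple, discount_rate):
    """PV of explicit-period FCFs plus a PV'd EV/EBITDA terminal value.

    fcfs[0] is the free cash flow one year from today, fcfs[-1] the final
    forecast year; the terminal value is assumed to be realized at that point.
    """
    pv_fcfs = sum(fcf / (1 + discount_rate) ** t
                  for t, fcf in enumerate(fcfs, start=1))
    terminal_value = terminal_ebitda * exit_multiple
    pv_terminal = terminal_value / (1 + discount_rate) ** len(fcfs)
    return pv_fcfs + pv_terminal


def implied_upside(enterprise_value, net_cash, shares_outstanding, share_price):
    """Equity value per share relative to the current price."""
    per_share = (enterprise_value + net_cash) / shares_outstanding
    return per_share / share_price - 1


if __name__ == "__main__":
    DISCOUNT_RATE = 0.131   # WACC cited in the text
    EXIT_MULTIPLE = 23.59   # average U.S. chipmaker EV/EBITDA cited in the text
    # Hypothetical inputs ($ mln, shares in mln, price in $), for illustration only.
    ev = dcf_enterprise_value([4_000, 5_000, 6_000], 9_000, EXIT_MULTIPLE, DISCOUNT_RATE)
    print(f"EV: {ev:,.0f}")
    print(f"Upside: {implied_upside(ev, 3_000, 1_600, 110.0):.1%}")
```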

Verdict

In conclusion, we believe AMD’s strategies, encompassing a broad product portfolio for AI, an open software platform for AI, and established partnerships, position the company as a significant player in the rapidly growing field of artificial intelligence. The computational demands of AI, especially in data-intensive tasks like machine learning, highlight the crucial role of high-performance chips. AMD’s focus on GPUs, along with its competitors Nvidia and Intel, underscores the industry-wide recognition of the importance of advanced chip technology in meeting the escalating demands of AI applications.

While AMD claims superiority in GPU technology, our analysis reveals that Nvidia and Intel also exhibit strengths in various aspects. The competitive environment is fluid, with each company showcasing innovation and expertise. We therefore believe it would be premature to declare a clear winner, as all three companies contribute significantly to the AI hardware market. Their diverse strengths, encompassing hardware, software, and integration capabilities, make them formidable contenders.

Examining market dynamics, we anticipate a stable market share for AMD, Nvidia, and Intel in the GPU sector. Despite differences in launch timelines and specific strengths, the overall competitive landscape remains balanced. The similarity in partner ecosystems and market shares across the GPU, FPGA, and DPU segments suggests a collective dominance by these three companies.

Consequently, we believe that going forward, the market shares of AMD, Nvidia, and Intel will remain stable, in line with the projected growth in AI and data-intensive computing applications. We revised our valuation of the company using a DCF approach, taking into account the substantial improvement in its FCF margins, which averaged 20.4% over the past two years. As a result, we set a higher price target of $160.20 and maintain our Strong Buy rating on the company.
