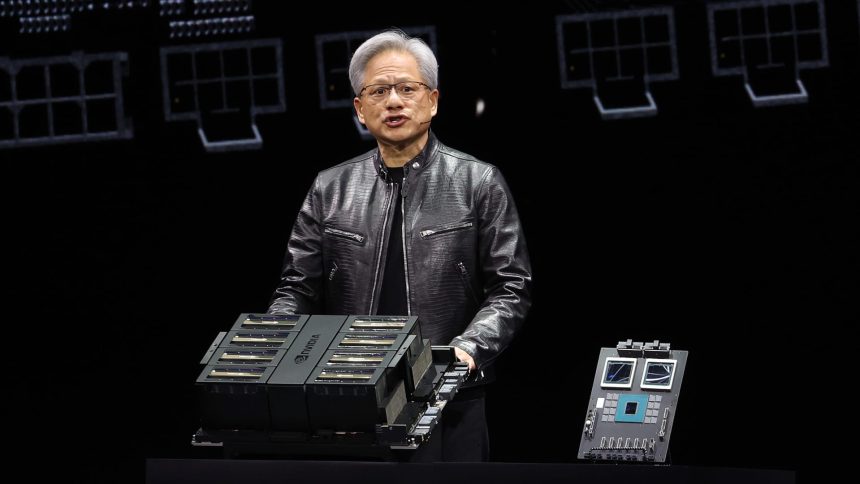

When Nvidia CEO Jensen Huang took the stage Monday at the chipmaker’s hyped-up artificial intelligence conference, he joked to the thousands of attendees packed into the arena that this was “not a concert.” In a literal sense, Huang was right. But that didn’t stop him from using his two-hour keynote address to cement Nvidia’s status as the generative AI bandleader. He delivered hardware and software product announcements, including the anticipated next-generation Blackwell AI chip, and laid out why the chipmaker has become so dominant in the AI market.

To our ears, Nvidia’s loudest instrument Monday was its growing lineup of software offerings, which is central to our confidence in this “own it, don’t trade it” stock. That designation used to belong only to Apple, a company Nvidia has taken a page from. Just as Apple became a company driven by software and hardware paired together to create an ecosystem, Nvidia is in the midst of a similar transformation. Huang’s keynote speech at the long-awaited GTC conference made that clear.

“It’s not just a chip. It is a platform. Now what does that mean? It means it’s filled with software. What does that mean? It’s recurring revenue; it’s not one-off,” Jim Cramer said Tuesday. “You get into [Nvidia’s] ecosystem; you stay in their ecosystem. There are a lot of people who say it’s a defensive move. I say it’s an offensive move.”

Shares of Nvidia rose about 0.4% Tuesday afternoon, to around $888 apiece, shaking off earlier losses in the session.

Chart: Nvidia’s stock performance over the past 12 months.

The latest step in the chipmaker’s journey beyond hardware is the introduction of Nvidia Inference Microservices. These so-called NIMs are prebuilt software packages that developers can use to shorten the development timeline for AI applications, such as copilot tools, built on their companies’ own data and compute infrastructure.
NIMs will be included in Nvidia’s software subscription for businesses, called AI Enterprise. The goal of NIMs is to make it easier for companies to take existing AI models and deploy them in useful applications, thus driving the adoption and growth of Nvidia’s AI services offerings. Nvidia said ServiceNow, which makes workplace automation software, is one customer signed up to use NIMs.

NIMs are part of Nvidia’s increasingly important software and services business, which reached an annualized revenue rate of $1 billion in the three months ended in January. That is small compared with the company’s full-year sales, which swelled to $61 billion, but the business is growing fast and has a lot of runway. Other Nvidia software and services include DGX Cloud, a supercomputer accessible through a web browser. Drugmaker Amgen uses DGX Cloud, according to Nvidia. “Everything that we do starts with software,” Huang told Jim in an interview Tuesday.

Nvidia’s announcements at GTC came at a critical juncture for the company. The generative AI boom sparked in late 2022 by the launch of ChatGPT is firmly in its second year, and questions swirl about the sustainability of investment in the technology and, by extension, Nvidia’s growth. Its sales more than doubled in its fiscal year ended in January, while adjusted profit nearly quadrupled. Shares of Nvidia are up about 500% since the start of 2023, including nearly 80% so far this year.

At the same time, Nvidia is facing mounting competition from large customers that are now designing their own AI processors, namely Microsoft and Meta Platforms, and from rival semiconductor firms such as Advanced Micro Devices, which late last year started shipping the MI300X, its most advanced challenge to Nvidia to date.
Nvidia’s strong standing in the competitive landscape is fortified by its ability to offer high-quality products for every kind of AI computing need: hardware, networking components to tie all the chips together within data centers, and the software tools needed to build applications.

In the hardware race, Nvidia on Monday once again upped the stakes with the release of its highly anticipated Blackwell chip architecture, which delivers a host of performance improvements over its predecessor, Hopper, the architecture behind the immensely popular H100 chip. Specifically, Nvidia said Blackwell systems offer up to a 25-fold improvement in total cost of ownership and energy consumption. Additionally, according to Nvidia, the new architecture can train AI models up to four times as fast as Hopper-based systems, while also speeding up inference tasks (essentially the day-to-day use of models) by 30 times. The improved inference performance is notable, given that Nvidia’s earnings report last month revealed its chips have played a bigger role in inference than many on Wall Street expected.

Nvidia’s Blackwell offerings include the GB200 chip, which pairs two Blackwell-based graphics processing units (GPUs) with another kind of processor called a central processing unit (CPU). In his interview with Jim on Tuesday, Huang estimated that one GB200 chip could cost between $30,000 and $40,000. Nvidia also unveiled an entire data center server rack called the GB200 NVL72. The rack consists of 36 GB200 chips (meaning 36 CPUs and 72 GPUs) connected via Nvidia’s improved networking components.

Early adopters of the new Blackwell architecture include Amazon Web Services, Dell Technologies, Alphabet’s Google, Meta Platforms, Microsoft, ChatGPT creator OpenAI, Oracle and Tesla, according to Nvidia. On Monday, Huang said Nvidia expects Blackwell to be the “most successful” product launch in its history, touting Blackwell as “a platform” for AI computing.
For example, Huang said a customer currently working on Hopper-generation hardware can simply swap the new Blackwell hardware into its existing server racks. While that’s an important consideration for those looking to upgrade to the latest and greatest, it also reflects how much deeper Nvidia’s competitive moat stands to get. With Nvidia advancing its Apple-like transformation through new services like NIMs, customers working on its hardware and using its software are going to become more deeply embedded in its ecosystem than ever before.

Wall Street analysts were generally upbeat on Nvidia’s slate of GTC announcements, even though there were no major surprises. Goldman Sachs raised its price target on Nvidia to $1,000 a share from $875, with analysts saying they had a “renewed appreciation” for the company’s competitive position in the AI race. Elsewhere, JPMorgan analysts argued Nvidia “continues to be 1-2 steps ahead of its competitors.”

(Jim Cramer’s Charitable Trust is long NVDA, MSFT, META, AAPL and AMZN.)

As a subscriber to the CNBC Investing Club with Jim Cramer, you will receive a trade alert before Jim makes a trade. Jim waits 45 minutes after sending a trade alert before buying or selling a stock in his charitable trust’s portfolio. If Jim has talked about a stock on CNBC TV, he waits 72 hours after issuing the trade alert before executing the trade.

THE ABOVE INVESTING CLUB INFORMATION IS SUBJECT TO OUR TERMS AND CONDITIONS AND PRIVACY POLICY, TOGETHER WITH OUR DISCLAIMER. NO FIDUCIARY OBLIGATION OR DUTY EXISTS, OR IS CREATED, BY VIRTUE OF YOUR RECEIPT OF ANY INFORMATION PROVIDED IN CONNECTION WITH THE INVESTING CLUB. NO SPECIFIC OUTCOME OR PROFIT IS GUARANTEED.
